Update: I recently left Google DeepMind and started a faculty job in South Korea in 2025.

Hi, I'm an Assistant Professor at Yonsei University in the Department of Computer Science and Engineering, where I co-lead the Human-Data Interaction Lab.

My research focuses on addressing key challenges in responsible AI, such as model failure, bias, and safety. I take a human-centered approach, often through interactive data visualization, to help practitioners explore data and analyze model behavior. For example, our interactive tools have been extensively used at Google for model evaluation and featured at Google I/O.

Before joining Yonsei, I was a Senior Research Scientist at Google DeepMind in the People + AI Research (PAIR) team and an Assistant Professor at Oregon State University. I received my Ph.D. from Georgia Tech, where I was advised by Polo Chau and received a Dissertation Award.

Areas of expertise: Data Visualization, Responsible AI, Human-Computer Interaction

Research Interests

AI systems are inherently imperfect. They fail unexpectedly, reflect biases, or raise safety concerns. We believe these issues are best addressed by enabling people to actively engage with the data behind these systems. To support this, our research group develops methods and tools that help people interactively analyze such data, drawing on methods from data visualization, human-computer interaction, and responsible AI. We're particularly interested in (but not limited to):

- Evaluation of LLMs: Characterizing systematic failure modes and diverse behaviors to facilitate holistic model evaluation.

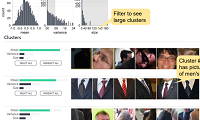

- Interactive Data Visualization: Building tools for exploring large-scale datasets (e.g., text, images, videos) to uncover insights.

- Human-in-the-Loop Red Teaming and Bias Analysis: Assessing bias, fairness, and safety risks that automated methods might miss.

- Debugging AI Agents: Visualizing AI systems to explain and control their unexpected behavior.

Note for Prospective Students

We're actively looking for graduate students and undergraduate interns. We value diverse strengths: whether your interest lies in building interfaces, analyzing data, or critically examining AI's risks, there is a place for you in our lab. You do not need to excel in all areas; we welcome those with an interest in even one of these. If you're interested, please email me your CV, transcript, and a one-page statement explaining why you'd like to work with us and what relevant experience you have. If there's a potential fit, I'll get back to you within a week regarding next steps.

Selected Publications

-

Data-Prompt Co-Evolution: Growing Test Sets to Refine LLM Behavior

CHI 2026 (Conditionally Accepted)

-

Transformer Explainer: Learning LLM Transformers with Interactive Visual Explanation and Experimentation

CHI 2026 (Conditionally Accepted)

-

SafePath: Preventing Harmful Reasoning in Chain-of-Thought via Early Alignment

NeurIPS 2025

arXiv PDF

-

LLM Comparator: Interactive Analysis of Side-by-Side Evaluation of Large Language Models

VIS 2024

DOI PDF Blog Code

Deployed on Google's LLM Evaluation Platforms

Featured at Google I/O on Gemini Open Models and Responsible AI Toolkit

-

Adversarial Nibbler: An Open Red-Teaming Method for Identifying Diverse Harms in Text-to-Image Generation

FAccT 2024

DOI arXiv PDF Blog

-

VLSlice: Interactive Vision-and-Language Slice Discovery

ICCV 2023

DOI arXiv PDF Talk Website

-

Visualizing Linguistic Diversity of Text Datasets Synthesized by Large Language Models

VIS 2023 (Short)

DOI arXiv PDF Code

-

DendroMap: Visual Exploration of Large-Scale Image Datasets for Machine Learning with Treemaps

VIS 2022

DOI arXiv PDF Twitter Post Demo

Employment

-

Yonsei University, Seoul, South Korea

2025 - present

Assistant Professor, Department of Computer Science and Engineering, College of Computing

-

Google, Atlanta, GA

2022-2025

Senior Research Scientist, People+AI Research (PAIR) Team, Google DeepMind

-

Oregon State University, Corvallis, OR

2019-2022

Assistant Professor of Computer Science, School of Electrical Engineering and Computer Science

-

Google, Cambridge, MA

Summer 2017

Software Engineering Intern, People+AI Research (PAIR) Team, Google Brain

-

Facebook, Menlo Park, CA

Summer 2016

Research Intern, Applied ML Research Group

-

Facebook, Menlo Park, CA

Summer 2015

Research Intern, Applied ML Research Group

Education

-

Ph.D. in Computer Science,

Georgia Institute of Technology, Atlanta, GA

2013-2019

Thesis: Human-Centered AI through Scalable Visual Data Analytics

Committee: Polo Chau (Advisor), Sham Navathe, Alex Endert, Martin Wattenberg, and Fernanda Viégas

-

M.S. in Computer Science and Engineering,

Seoul National University, South Korea

2009-2011

Thesis: Context-Aware Recommendation using Learning-to-Rank (Advisor: Sang-goo Lee)

-

B.S. in Electrical and Computer Engineering,

Seoul National University, South Korea

2005-2009

Awards

- College of Computing Dissertation Award, Georgia Tech 2021

- Finalist, Facebook Research Award 2021

- ACM Trans. Interactive Intelligent Systems (TiiS) 2018 Best Paper, Honorable Mention 2020

- Google PhD Fellowship, Google AI 2018-2019

- Graduate TA of the Year in School of Computer Science, Georgia Tech 2018

- NSF Graduate Research Fellowship, National Science Foundation 2014-2017

- Best Paper Award, PhD Workshop at CIKM 2011

- National Scholarship for Science and Engineering, Korea Student Aid Foundation 2005-2009

Teaching

Yonsei University

- CSI 6305. Responsible AI, Spring 2026

- CAS 4150. Introduction to Data Visualization, Spring 2026

- GCB 6114. Data Visualization, Fall 2025

- CAS 4102. AI Capstone Design, Fall 2025

- CSI 7110. Topics in Responsible AI, Spring 2025

- CAS 4150. Introduction to Data Visualization, Spring 2025

Oregon State Univ.

- CS 499/549. Visual Analytics, 2022

- CS 565. Human-Computer Interaction, 2020-2022

- CS 539. Data Visualization for Machine Learning, 2020

Student Advising

-

Yonsei University

Minjae Lee (M.S. Student, 2025-)

Sarang Choi (Undergraduate Intern, 2025-)

Yejin Kim (Undergraduate Intern, 2025-)

Minji Jung (Intern, 2025-)

Dasol Choi (Project Advising, 2025)

-

Oregon State Univ.

Eric Slyman (Ph.D, 2021-25) (now at Adobe Research)

Montaser Hamid (Ph.D Student, 2021-22)

Yashwanthi Anand (Ph.D Student, 2021-22)

Delyar Tabatabai (M.S, 2020-22) (now at Apple)

Anita Ruangrotsakun (M.S, 2020-22) (now at Momento)

Dayeon Oh (M.S, 2020-22) (now at LG)

Roli Khanna (M.S, 2020-21) (now at Expedia)

Donny Bertucci (B.S, 2020-22) (started as a PhD student at Georgia Tech; now at Axiom Bio)

Mark Ser (B.S, 2020-21) (now at Microsoft)

Kristina Lee (B.S, 2020-21) (now at Oracle)

Thuy-Vy Nguyen (B.S, 2020-21) (now at Oracle)

Invited Talks

-

Keynote, SIGCHI Korea Local Chapter Summer Event, KAIST, 2025

Keynote, 2nd Korea Visualization Workshop (K-VIS), Seoul National University, 2024

Invited Talk, KAIST Graduate School of AI, 2024

Panel Speaker, Google Global PhD Fellowship Summit, 2023

See my CV for more.

Professional Service

-

Conf. Organization

VIS 2024-26 (Publication Chairs)

IDEA@KDD 2018 (Workshop Organizers)

WSDM 2016 (Web)

-

Conf. PC

VIS (2020-present)

IUI (2019-present)

AAAI (2021-22)

SDM (2020)

WSDM (2022 Demo)

CIKM (2019 Demo)

-

Journal Reviewers

IEEE Transactions on Visualization and Computer Graphics (TVCG)

ACM Transactions on Interactive Intelligent Systems (TiiS)

ACM Transactions on Computer-Human Interaction (TOCHI)

ACM Transactions on Intelligent Systems and Technology (TIST)

Distill

-

Conf. Reviewers

CHI (2014, 17-18, 21-22, 24-26), FAccT (2026), UIST (2023), CSCW (2020), VIS (2018-20), EuroVis (2018, 25), KDD (2014-16), SDM (2014, 16-17), IUI (2016), RecSys (2016), SIGMOD (2013), DASFAA (2011)

-

Grant Reviewers

NSF Review Panelists (CISE III)

Google Academic Research Awards

Bio

Minsuk Kahng is an Assistant Professor in the Department of Computer Science and Engineering at Yonsei University in South Korea. His research aims to empower researchers and practitioners to gain insights through interactive data visualization, enabling the responsible development of AI systems. To achieve this, he builds novel visual analytics tools that help them interpret model behavior and explore large datasets.

Kahng publishes papers at the top venue in the field of data visualization (IEEE VIS), as well as at premier conferences in the fields of AI, Human-Computer Interaction, and Responsible Computing. His research has led to deployed technologies (e.g., LLM Comparator for Google, ActiVis for Facebook), has been recognized by prestigious awards, including a Google PhD Fellowship and an NSF Graduate Research Fellowship, and has been supported by NSF, DARPA, Google, and NAVER.

Before joining Yonsei, Minsuk was a Senior Research Scientist at Google DeepMind in the People + AI Research (PAIR) team and an Assistant Professor at Oregon State University. He received his Ph.D. from Georgia Tech with a Dissertation Award.

Website: https://minsuk.com

CV: https://minsuk.com/minsuk-kahng-cv.pdf